What Is Zero-Shot Transfer? Understanding Its Impact on Machine Learning

Discover zero-shot transfer & its role in machine learning. Learn how this technique lets AI models handle new tasks without task-specific training.

Understanding Zero-Shot Transfer in Machine Learning

Defining Zero-Shot Transfer: Mechanisms & Functionality

Zero-shot transfer enables a model to make predictions for tasks it did not encounter during training by leveraging information from related tasks. The technique depends on semantic relationships between tasks, which let concepts carry over from one task to another.

Models are designed to generalize learned knowledge. They apply it to new, unseen categories without additional training. Importantly, zero-shot learning minimizes data requirements for diverse tasks.

The Evolution of Zero-Shot Transfer: A Historical Perspective

Over the years, machine learning has evolved rapidly. Early methods focused on supervised learning with labeled datasets. Researchers identified limitations in training when data is scarce. As a response, zero-shot learning emerged in academic circles. Significant advancements in natural language processing boosted its popularity.

The introduction of embeddings & semantic understanding provided new avenues. Consequently, zero-shot transfer gained traction in fields such as image recognition & natural language understanding.

Implementing Zero-Shot Transfer: Practical Steps for Success

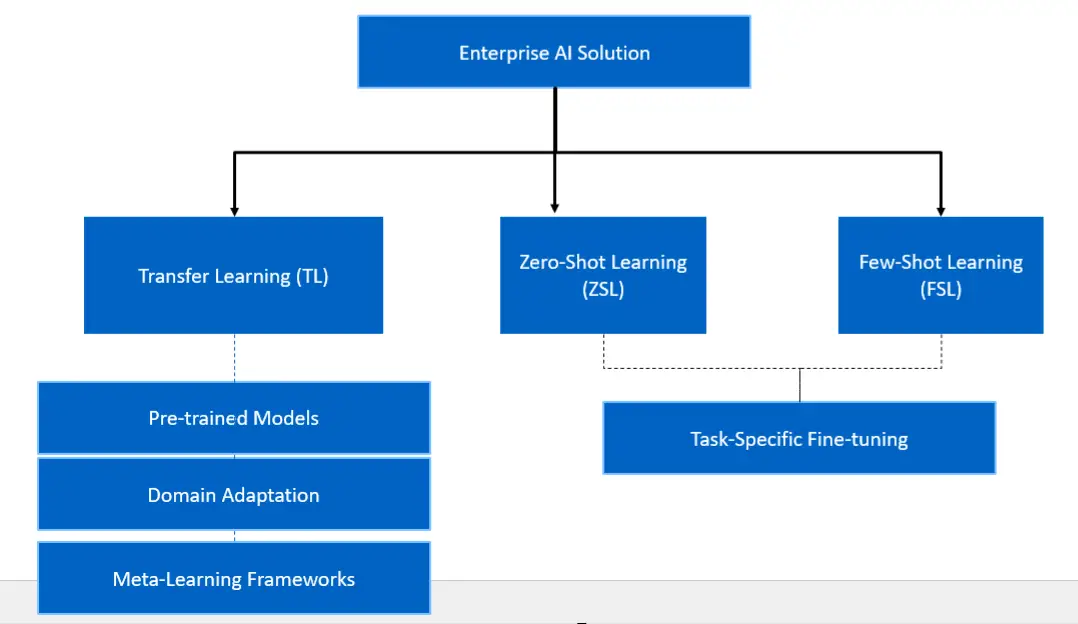

Organizations can adopt zero-shot transfer through a structured approach. First, selecting a robust pre-trained model is crucial; models such as BERT & GPT-3 are common choices for language tasks. Next, ensure that the target tasks align with the representations the model has already learned.

Using descriptive labels aids the model’s performance. Consider also using feedback loops for continuous improvement. Where zero-shot performance falls short, fine-tuning the pre-trained model can improve its effectiveness in a specific domain, although the result is then no longer strictly zero-shot.
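As a minimal sketch of why descriptive labels help, the snippet below scores an input against a fuller description of each class rather than a bare class name. The `score_overlap` function is a deliberately crude word-overlap stand-in for a pre-trained model's similarity score, & the label descriptions are invented for illustration; neither comes from a real library.

```python
from __future__ import annotations

# Toy illustration: descriptive labels give a scorer more to match against.
# score_overlap is a hypothetical stand-in for a pre-trained model's similarity.


def tokenize(text: str) -> set[str]:
    """Lowercase, split on whitespace, and strip basic punctuation."""
    return {word.strip(".,!?").lower() for word in text.split()}


def score_overlap(text: str, label_description: str) -> float:
    """Jaccard overlap between the input words and the label description."""
    a, b = tokenize(text), tokenize(label_description)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def classify(text: str, label_descriptions: dict[str, str]) -> str:
    """Return the label whose description best matches the input text."""
    return max(
        label_descriptions,
        key=lambda label: score_overlap(text, label_descriptions[label]),
    )


# Assumed example labels; no labeled training data is used for either class.
LABELS = {
    "billing": "questions about an invoice, payment, refund or charge",
    "shipping": "questions about delivery, tracking or a late package",
}

prediction = classify("Where is my package? The delivery is late.", LABELS)
print(prediction)
```

In practice the overlap score would be replaced by a pre-trained encoder or language model, but the role of the label text stays the same: the richer the description, the more the model has to match against.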

Exploring the Advantages: Why Zero-Shot Transfer Stands Out

Zero-shot transfer offers numerous advantages for machine learning practitioners. It significantly reduces data collection efforts. Consequently, teams can save resources & time. On top of that, this technique allows for rapid adaptation to new tasks.

Models demonstrate versatility across various domains. The technique also supports exploration in niche areas with limited data availability. Overall, zero-shot transfer enhances model robustness & efficiency.

Navigating Challenges: Solutions to Zero-Shot Transfer Issues

Despite its advantages, implementing zero-shot transfer presents challenges. Data representation remains critical for model performance. If semantic relationships are weak, predictions falter. Ambiguity in task definitions can also confuse models.

One solution involves refining task descriptions. Clear, concise labels enhance understanding. Collaborating across teams can also produce better datasets. Finally, ongoing research should address existing limitations in current methods.

Looking Ahead: Future Trends in Zero-Shot Transfer Technology

Future developments in zero-shot transfer show promise. As models have grown in scale, their zero-shot accuracy has tended to improve. Researchers continue exploring new architectures & techniques.

Recent advancements in generative models open new frontiers. This may lead to even broader applications in various industries. Better use of contextual information is expected to drive future progress. Zero-shot transfer is likely to play an important role in the evolution of machine learning.

Understanding Zero-Shot Transfer

Zero-shot transfer is a significant concept in machine learning. It refers to the ability of a model to apply learned skills to new, unseen scenarios without any additional training. This capability is crucial for enhancing the flexibility & applicability of various models in real-world applications.

In traditional machine learning, models typically require vast amounts of labeled data to achieve high performance. Zero-shot transfer, however, significantly reduces this dependency, making it possible to use models in situations they never encountered during training.

The zero-shot learning paradigm is particularly beneficial in situations where obtaining labeled data is difficult, expensive, or impossible. By leveraging knowledge from related tasks, models can effectively generalize their learned representations to new instances.

How Zero-Shot Transfer Works

Zero-shot transfer often involves the use of auxiliary information. This can include knowledge bases, semantic attributes, or other forms of contextual data. For instance, models can recognize unseen categories using associated attributes of known categories.

The idea is to create a bridge that allows the model to relate new tasks to those it has previously learned. This process typically involves embedding different domains into a unified space where relationships can be drawn across tasks.

During the transfer process, models derive features & patterns from known tasks. They then apply this understanding to new tasks. This can take various forms, such as mapping images to textual descriptions or converting language patterns.
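The attribute-based idea described above can be sketched as follows. This is a toy example under stated assumptions: the attribute list, the class signatures, & the `detected` scores are all invented, & in a real system the attribute scores would come from detectors trained only on the known classes.

```python
from __future__ import annotations

import math

# Attribute order (assumed for illustration):
# [has_stripes, has_hooves, has_mane, is_carnivore]
CLASS_ATTRIBUTES = {
    "horse": [0, 1, 1, 0],  # seen during training
    "tiger": [1, 0, 0, 1],  # seen during training
    "zebra": [1, 1, 1, 0],  # unseen: known only through its attributes
}


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def predict_class(attribute_scores: list[float],
                  class_attributes: dict[str, list[float]]) -> str:
    """Pick the class whose attribute signature is closest to the scores."""
    return max(
        class_attributes,
        key=lambda name: cosine(attribute_scores, class_attributes[name]),
    )


# Hypothetical attribute-detector output for a new image.
detected = [0.9, 0.8, 0.7, 0.1]
label = predict_class(detected, CLASS_ATTRIBUTES)
print(label)
```

The attribute vector acts as the bridge: the model never sees a zebra image, yet the unseen class can still win because its description matches the detected attributes best.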

Benefits of Zero-Shot Transfer

One of the primary benefits of zero-shot transfer is its efficiency in resource usage. It reduces the need for extensive datasets required for training, saving both time & costs.

Another significant benefit lies in its adaptability. Models trained under this paradigm can quickly adjust to new tasks, making them highly versatile. This feature is invaluable in domains that demand rapid responses.

This approach also helps in scenarios with limited data. In many applications, collecting large datasets is impractical. Zero-shot learning mitigates this issue.

Applications of Zero-Shot Transfer

The applications of zero-shot transfer span across diverse fields. In natural language processing, models can generate responses or translate languages without prior examples.

In computer vision, models can classify objects they have never seen, based on attributes learned from other categories. For example, a model that has learned attributes such as stripes & hooves from other mammals could recognize a zebra from a description of those features, even without having seen a zebra during training.

Another area is robotics. Robots capable of zero-shot transfer can adapt to new tasks on the fly. This adaptability allows robots to perform efficiently in dynamic environments.

Challenges in Zero-Shot Transfer

Despite its advantages, zero-shot transfer faces numerous challenges. One major issue is the reliance on the quality of the auxiliary information. Poor or incorrect attributes can lead to misclassifications or faulty predictions.

On top of that, the degree of similarity between the known & unknown tasks plays a crucial role. If the gap is too wide, the model may struggle to generalize effectively, resulting in diminished performance.

Scaling zero-shot methods can also be complicated. As new tasks emerge, maintaining the robustness of the learned representations is essential, requiring continuous refinement.

Zero-Shot Transfer in Natural Language Processing

In natural language processing (NLP), zero-shot transfer has gained significant prominence. Models can perform sentiment analysis, translation, & even content generation without direct examples.

For instance, generative models like GPT-3 demonstrate zero-shot capabilities. Given only a natural-language instruction, they can generate coherent & contextually relevant text across various topics, without task-specific examples in the prompt.

This adaptability allows businesses to implement NLP solutions swiftly, streamlining operations such as customer support & content creation without the usual setup time.

Real-World Examples of Zero-Shot Transfer

Several high-profile projects highlight the effectiveness of zero-shot transfer. For instance, OpenAI’s Codex demonstrates how coding tasks can be addressed without prior examples.

This technology enables developers to write code simply by describing the required functionality in natural language. Such capabilities have transformed software development, making it more accessible.

Another notable example is Facebook’s Seq2Seq model, which showcases translation tasks with zero-shot approaches. It can translate between languages for which no direct parallel data was available during training, relying instead on shared linguistic features.

Zero-Shot Transfer in Computer Vision

Within the realm of computer vision, zero-shot transfer is revolutionizing how models identify & classify images. Traditional models require extensive datasets to recognize objects accurately.

Conversely, zero-shot models leverage human-understandable attributes to classify unseen objects. For example, a model may identify a “spotted dog” even if it has never encountered that specific breed before.

This advancement provides numerous benefits for industries such as healthcare, automotive, & security, where rapid & accurate image recognition is vital.

The Future of Zero-Shot Transfer

The scope of zero-shot transfer continues to expand. Researchers are actively exploring methods to enhance accuracy & reduce the reliance on auxiliary information.

Integration with deep learning frameworks is likely to further influence this field. This will facilitate more sophisticated architectures capable of learning complex representations.

On top of that, as more sophisticated datasets become available, the potential for advances in zero-shot techniques will only increase. This scenario heralds a new era of machine learning, marked by unparalleled flexibility.

Key Concepts Related to Zero-Shot Transfer

- Zero-Shot Learning

- Generalization

- Transfer Learning

- Multi-Task Learning

- Embedding Space

Strategies for Implementing Zero-Shot Transfer

To successfully implement zero-shot transfer, several strategies can be adopted. One includes the development of well-curated attribute sets. These attributes should clearly describe the relationships between known & unknown tasks.

Another strategy focuses on refining the embedding space. By ensuring effective mapping between different modalities, the potential for transfer increases significantly. This can involve employing techniques like principal component analysis to capture essential features.

Building robust evaluation frameworks is equally crucial. These frameworks should assess model performance comprehensively, focusing on both known & unknown tasks to fine-tune the underlying methodologies.
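One common way to assess known & unknown classes together is to report the mean per-class accuracy on each group plus their harmonic mean, which stays low if either group performs poorly. The sketch below assumes a simple classification setting; the labels & predictions are made up for illustration.

```python
from __future__ import annotations

from collections import defaultdict


def per_class_accuracy(y_true: list[str], y_pred: list[str],
                       classes: set[str]) -> float:
    """Mean of per-class accuracies, restricted to the given classes."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for truth, pred in zip(y_true, y_pred):
        if truth in classes:
            total[truth] += 1
            correct[truth] += int(truth == pred)
    accs = [correct[c] / total[c] for c in classes if total[c]]
    return sum(accs) / len(accs) if accs else 0.0


def harmonic_mean(seen_acc: float, unseen_acc: float) -> float:
    """Harmonic mean of seen and unseen accuracy (0.0 if both are zero)."""
    denom = seen_acc + unseen_acc
    return 2 * seen_acc * unseen_acc / denom if denom else 0.0


# Invented evaluation data: "cat" and "dog" were seen, "zebra" was not.
y_true = ["cat", "cat", "dog", "dog", "zebra", "zebra"]
y_pred = ["cat", "cat", "dog", "cat", "zebra", "dog"]

seen_acc = per_class_accuracy(y_true, y_pred, {"cat", "dog"})
unseen_acc = per_class_accuracy(y_true, y_pred, {"zebra"})
h = harmonic_mean(seen_acc, unseen_acc)
print(f"seen={seen_acc:.2f} unseen={unseen_acc:.2f} H={h:.2f}")
```

Reporting the two groups separately makes it visible when a model does well on known classes only because it rarely predicts the unknown ones.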

Impact on Machine Learning Paradigms

The advent of zero-shot transfer is reshaping traditional machine learning paradigms. It promotes a shift towards more flexible models capable of operating in diverse environments.

This new paradigm encourages collaboration between different fields, facilitating the sharing of methodologies & insights. The result is a more integrated approach to problem-solving.

Moreover, the implications extend to areas like data science, where reducing the need for labeled data can significantly alter research trajectories. Automation & efficiency become achievable goals, enhancing productivity across industries.

Realizing Zero-Shot Transfer: Steps Forward

Achieving effective zero-shot transfer requires a structured approach. Firstly, establishing clear relationships is essential. By identifying how new tasks correlate with known tasks, the model’s adaptability improves.

Secondly, ongoing research to optimize both data accuracy & semantic relationships will bolster model performance. This investment in research drives innovation in technology deployment.

Lastly, fostering collaboration among researchers, developers, & industry stakeholders can yield meaningful insights. Sharing best practices & experiences leads to collective knowledge advancement.

Integrating Zero-Shot Transfer in AI Systems

Integrating zero-shot transfer into existing AI systems enhances their capabilities significantly. Companies focusing on AI advancement can leverage this approach to optimize existing algorithms.

Such integration can focus on augmenting current models, allowing them to generalize better across datasets. This potentially leads to reduced training times & costs.

On top of that, implementing scalable architectures ensures models remain effective as tasks evolve. Developing a modular approach may facilitate incorporating new tasks seamlessly.

Zero-Shot Transfer & Creativity

Zero-shot transfer holds implications for creativity in AI. By enabling the generation of previously unseen artistic styles or designs, models become valuable tools for creators.

For instance, a model trained with historical artistic styles can produce unique compositions that draw inspiration from multiple sources without direct examples. This capability fosters innovation in creative industries.

As a result, artists & designers can leverage these technologies to refine their work, exploring new avenues that would otherwise require extensive experimentation.

Evaluating Zero-Shot Transfer Performance

To gauge the success of zero-shot transfer, establishing robust performance metrics is essential. These metrics should consider both the accuracy & reliability of predictions.

Common evaluation methods include confusion matrices & precision-recall curves, providing a comprehensive view of model performance. Testing across various scenarios ensures that the model exhibits resilience in different conditions.
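As a small illustration of these metrics, the sketch below builds confusion counts from predictions & derives precision & recall for a single class. The labels & predictions are invented; a real evaluation would use a held-out set that includes the unseen classes.

```python
from __future__ import annotations

from collections import Counter


def confusion_counts(y_true: list[str], y_pred: list[str]) -> Counter:
    """Count (true label, predicted label) pairs: a sparse confusion matrix."""
    return Counter(zip(y_true, y_pred))


def precision_recall(y_true: list[str], y_pred: list[str],
                     label: str) -> tuple[float, float]:
    """Precision and recall for one class, computed from the confusion counts."""
    counts = confusion_counts(y_true, y_pred)
    tp = counts[(label, label)]
    fp = sum(n for (t, p), n in counts.items() if p == label and t != label)
    fn = sum(n for (t, p), n in counts.items() if t == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Invented predictions; "zebra" plays the role of the unseen class.
y_true = ["zebra", "zebra", "horse", "horse", "horse"]
y_pred = ["zebra", "horse", "horse", "horse", "zebra"]

zebra_precision, zebra_recall = precision_recall(y_true, y_pred, "zebra")
print(zebra_precision, zebra_recall)
```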

Continual assessment & refinement are also necessary. This iterative process helps identify areas for improvement, facilitating a feedback loop that strengthens the model’s capabilities over time.

Zero-Shot Transfer & User Experience

- Enhanced Interactivity

- Personalized Recommendations

- Dynamic Content Delivery

- Improved Customer Engagement

- Real-time Feedback

Gaining Competitive Advantage with Zero-Shot Transfer

Incorporating zero-shot transfer can give organizations a significant competitive edge. By integrating this approach, companies can innovate faster, adapting to market changes readily.

This agility allows businesses to capture new opportunities, respond to customer needs, & stay ahead of competitors. Organizations embracing these advancements often experience enhanced performance & productivity.

In addition, reducing dependency on vast labeled datasets simplifies model maintenance. Companies can allocate resources more effectively towards other critical operations.

Zero-shot transfer is revolutionizing machine learning by offering unprecedented flexibility in handling new tasks.

Building a Knowledge Base for Zero-Shot Transfer

Developing a solid knowledge base is fundamental for achieving zero-shot transfer. This knowledge base should encapsulate essential attributes across different tasks, serving as a foundation for model training.

Leveraging existing data & insights, organizations can create structured repositories. These repositories facilitate the organization of knowledge relevant to various domains, enhancing usability for machine learning applications.

As new tasks emerge, continuously updating this knowledge base ensures that models remain relevant & effective. This active engagement fosters an ecosystem of growth & learning.

Future Directions in Zero-Shot Learning

The future of zero-shot transfer lies in advanced research aimed at refining methodologies. Innovations in representation learning & distributed settings could revolutionize how we think about machine learning tasks.

Interdisciplinary approaches that merge insights from linguistics, cognitive science, & artificial intelligence may also enhance the effectiveness of zero-shot methodologies.

Ultimately, as technology evolves, so too will the applications & implications of zero-shot transfer across various sectors.

| Specification | Zero-Shot Transfer | Traditional Transfer Learning | Few-Shot Learning |
|---|---|---|---|
| Definition | Ability to generalize knowledge to new tasks without any training examples. | Leverages pre-trained models to perform well on new tasks with fine-tuning. | Trains models on very few labeled examples from new tasks. |
| Training Data Requirement | No training samples needed for target task. | Requires substantial labeled data from the target task. | Requires a limited number of labeled data points. |
| Learning Mechanism | Relies on the underlying knowledge & generalization capabilities of models. | Relies on fine-tuning & adaptation of pre-trained models. | Utilizes few examples to learn the task-specific features. |
| Examples of Applications | Natural language processing tasks, image classification with novel categories. | Image recognition, NLP applications with specific domains. | Speech recognition, small data classification. |
| Flexibility | Highly adaptable to completely new tasks. | Less flexible, often requires re-training for new tasks. | Moderately flexible, capable with limited labeled data. |
| Performance in Data Scarcity | Excels in scenarios with little to no available data. | Poor performance when data is scarce. | Performs well in low-data scenarios but with some limitations. |
| Complexity of Implementation | Relatively complex due to varying task definitions. | Easier to implement with established methodologies. | Moderate complexity, depends on task similarity. |
| Computational Resources | Often requires high computing power for initial training. | Moderate resources needed for fine-tuning. | Lower resource requirements due to few examples. |
| Evaluation Metrics | Accuracy, precision, recall, F1 score on unseen tasks. | Standard metrics applicable after fine-tuning. | Few-shot accuracy metrics, typical classification metrics. |
| Knowledge Transfer | Effective transfer of knowledge across completely different tasks. | Transference is task-specific, learned features can sometimes be reused. | Knowledge transfer is limited to similar tasks. |
| Use of Unlabeled Data | Highly effective; leverages pre-existing knowledge. | Unlabeled data often ignored, focusing on labeled only. | Some algorithms can utilize unlabeled data for semi-supervised learning. |
| Generalization | High generalization capabilities across different scenarios & tasks. | May overfit to the fine-tuning dataset. | Can generalize to a similar task but with limited insight. |
| Transferability | High transferability to new domains or contexts. | Lower transferability, generally domain-specific. | Transferability is task dependent, often limited to close domain tasks. |
| Time to Deployment | Rapid deployment due to no additional training needed. | Longer due to the fine-tuning phase. | Medium duration for preparation & few-shot training. |
| Real-World Usability | Highly usable in fast-evolving scenarios with novel categories. | Usable but often requires extensive pre-training. | Practical for real-time applications but data-limited. |
| Adaptation to New Concepts | Efficiently adapts without prior examples. | Adapts slowly, requiring comprehensive training. | Adapts with a few examples, presuming some base knowledge. |
| Community & Research Interest | High interest due to novel capabilities & applications. | Established research community, methodically developed. | Growing interest as applications expand. |
| Long-term Learning | Potential for continual learning over time. | Limited potential for long-term learning without retraining. | Can learn incrementally with new examples added over time. |
| Ethical Considerations | Requires careful consideration of unintended consequences in deployment. | Ethical deployment with adequate training bias checks. | Ethical challenges present in few-shot information transfer. |

What is Zero-Shot Transfer?

Zero-shot transfer refers to the ability of a model to perform tasks it has never seen before. In machine learning, this involves taking a model trained on one set of tasks & applying it to new tasks without additional training. This approach reduces the need for extensive labeled datasets, making it attractive for various applications.

This method stands out in scenarios where data scarcity limits traditional learning approaches. By utilizing existing knowledge & generalizing it to unfamiliar situations, models can demonstrate impressive flexibility. Zero-shot transfer relies heavily on robust underlying representations, allowing models to infer solutions dynamically.

The architecture of models plays a crucial role in this process. Advanced models can learn generalized features & relationships, which can be reused to tackle new tasks. This phenomenon is particularly useful in domains like natural language processing & computer vision, where labeling data can be prohibitively expensive.

Applications of Zero-Shot Transfer in Machine Learning

The applications of zero-shot transfer span various fields. One prominent area is natural language processing (NLP). In NLP, zero-shot models can understand & respond to inquiries or generate content without explicit examples. This application shows how well the model can comprehend human language nuances.

Another major application lies in computer vision. Models can classify images of previously unseen categories. Doing this without requiring additional training data makes zero-shot transfer highly valuable in scenarios where collecting labeled data is difficult. An example could be recognizing rare animal species in wildlife photography.

Healthcare also benefits significantly from zero-shot transfer. Models can identify patterns in medical images or texts without needing examples from every class. Given the sensitive nature of patient data, reducing the requirement for extensive labeled datasets can streamline the model training process dramatically.

Benefits of Zero-Shot Transfer

The key benefits of zero-shot transfer include efficiency & flexibility. Training a model usually requires vast amounts of labeled data. Zero-shot transfer circumvents this by enabling models to take on new tasks without further training, which reduces the time & resources spent on data labeling.

On top of that, the approach helps in enhancing the model’s robustness. Since the model adapts from similar tasks, it develops a broader understanding of the task domain. Consequently, performance improves even without direct supervision or training data tailored for specific tasks.

Zero-shot transfer also encourages innovation in model design. Researchers & developers are pushed to create models capable of leveraging knowledge across diverse domains. This multitasking ability ultimately leads to more powerful & versatile machine learning systems.

Challenges of Zero-Shot Transfer

Despite its advantages, zero-shot transfer is not without challenges. One significant hurdle lies in the need for high-quality representations. If the underlying model does not learn to generalize well, performing accurately on new tasks becomes complex. Models must have sufficient capacity to understand broader patterns.

Another challenge stems from potential domain shift. If the new tasks differ significantly from training data, performance may plummet. The model might struggle to extrapolate correct solutions without adequate contextual knowledge. This issue emphasizes the importance of domain similarity in ensuring successful zero-shot transfer.

Finally, there is the challenge of evaluation. Determining how well a model performs on zero-shot tasks can be complicated. When no labeled data exists for the new tasks, assessing performance accurately remains a significant obstacle in this area of research.

Zero-Shot Transfer vs Traditional Transfer Learning

Zero-shot transfer & traditional transfer learning serve different purposes. In traditional transfer learning, models are trained on a source task & fine-tuned on a target task. This method requires labeled data for the target task, which can negate some of the efficiency benefits.

Conversely, zero-shot transfer models are designed to operate without direct examples in the target domain. This feature allows them to leverage knowledge from a broader set of previous experiences. The absence of specific training data in the target domain sets zero-shot apart.

On top of that, traditional transfer learning often involves retraining a model based on the new domain. This incurs more computational cost & time. In contrast, zero-shot models can quickly adapt to new challenges with minimal overhead, demonstrating their efficiency in rapidly evolving fields.

The Mechanisms Behind Zero-Shot Transfer

Understanding the mechanisms behind zero-shot transfer requires examining how models learn. First, they must acquire high-level features through training on diverse tasks. These features enable the model to relate knowledge from various domains, facilitating transfer when facing new tasks.

Semantic knowledge plays an essential role in this process. Many models use semantic embeddings to capture the relationships between tasks. By translating tasks into a common space, the model can recognize similarities, enhancing its ability to adapt without additional data.
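The idea of translating inputs into a common space can be sketched as a projection followed by a nearest-neighbor lookup among label embeddings. Everything numeric here is an assumption for illustration: `PROJECTION` stands in for a mapping that would normally be learned on seen classes, & `LABEL_EMBEDDINGS` stands in for embeddings of class names or descriptions.

```python
from __future__ import annotations

import math


def project(matrix: list[list[float]], vector: list[float]) -> list[float]:
    """Map a feature vector into the shared space (matrix-vector product)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest_label(feature: list[float], projection: list[list[float]],
                  label_embeddings: dict[str, list[float]]) -> str:
    """Project the input, then return the most similar label embedding."""
    z = project(projection, feature)
    return max(label_embeddings, key=lambda name: cosine(z, label_embeddings[name]))


# Assumed values: 3-d input features mapped into a 2-d shared space.
PROJECTION = [
    [1.0, 0.0, 0.5],
    [0.0, 1.0, 0.5],
]
# "fox" has no training examples; it exists only as a point in the shared space.
LABEL_EMBEDDINGS = {"cat": [1.0, 0.1], "dog": [0.1, 1.0], "fox": [0.7, 0.7]}

predicted = nearest_label([0.6, 0.5, 0.4], PROJECTION, LABEL_EMBEDDINGS)
print(predicted)
```

Because new labels only need an embedding in the shared space, adding a class does not require retraining the projection.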

Model architecture also affects zero-shot capabilities. Some architectures allow for better feature extraction from existing data. Consequently, these models can apply learned insights to new tasks effectively. The interplay between architecture & generalization significantly influences the success of zero-shot transfer.

Case Studies of Zero-Shot Transfer in Action

Various case studies demonstrate the application of zero-shot transfer in real-world situations. One notable instance is OpenAI’s GPT-3. This language model can generate coherent text based on prompts it has never encountered. Its capacity to interpret requests is primarily due to its extensive training on diverse data.

Another case is Facebook’s AI, which successfully classified images from categories it had not seen during training. By training on a wide range of datasets, the model learned to generalize across different categories. This ability allows it to identify objects & scenes effectively, demonstrating zero-shot capabilities.

In healthcare, researchers have applied zero-shot transfer to predict disease outcomes from genetic data. This innovative approach allows pathologists to identify potential disease indicators without needing comprehensive datasets for every possible disease. Such attempts highlight the practical benefits zero-shot transfer provides in critical fields.

Zero-Shot Learning Techniques

Zero-shot learning employs several techniques to enhance performance & efficiency. One method is the use of semantic embeddings, which represent tasks in a shared space. This allows the model to infer relationships & apply knowledge to new challenges.

Another approach involves generative models. These models can create synthetic data points based on learned features. By generating examples for unseen classes, models can effectively train on these synthetic samples. This technique enhances the adaptability of the model.
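A heavily simplified sketch of this generative approach follows. The "generator" here is only Gaussian noise around each class's attribute vector, which is an assumption made to keep the example short; real systems would use a learned conditional generator trained on seen classes. The class names & attribute values are invented.

```python
from __future__ import annotations

import random


def synthesize(attributes: list[float], n: int, noise: float = 0.1,
               rng: random.Random | None = None) -> list[list[float]]:
    """Toy generator: sample n feature vectors around the attribute vector."""
    rng = rng or random.Random(0)
    return [[a + rng.gauss(0.0, noise) for a in attributes] for _ in range(n)]


def centroid(samples: list[list[float]]) -> list[float]:
    """Component-wise mean of a non-empty list of vectors."""
    dim = len(samples[0])
    return [sum(s[i] for s in samples) / len(samples) for i in range(dim)]


def nearest_centroid(x: list[float], centroids: dict[str, list[float]]) -> str:
    """Classify x by the closest class centroid (squared Euclidean distance)."""
    def sqdist(u: list[float], v: list[float]) -> float:
        return sum((a - b) ** 2 for a, b in zip(u, v))
    return min(centroids, key=lambda name: sqdist(x, centroids[name]))


rng = random.Random(42)  # fixed seed so the sketch is reproducible
# Unseen classes, described only by assumed attribute vectors.
UNSEEN = {"zebra": [1.0, 1.0, 0.0], "tiger": [1.0, 0.0, 1.0]}

# "Train" a classifier for the unseen classes purely on synthetic samples.
centroids = {
    name: centroid(synthesize(attrs, 50, rng=rng))
    for name, attrs in UNSEEN.items()
}
prediction = nearest_centroid([0.9, 0.8, 0.1], centroids)
print(prediction)
```

The design point is that once synthetic examples exist, the zero-shot problem turns into ordinary supervised classification over the unseen classes.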

Using external knowledge sources can also boost zero-shot learning capabilities. Some models incorporate knowledge graphs or external databases to provide contextual understanding. By leveraging existing knowledge, these models enhance their performance in zero-shot scenarios.
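One simple way to picture the knowledge-graph idea is to estimate a representation for an unseen class from its neighbors in the graph. The sketch below uses plain neighbor averaging, which is an assumption chosen for brevity; published methods typically learn how to propagate information over the graph. The graph & prototype values are invented.

```python
from __future__ import annotations

# Hypothetical knowledge graph: each class lists its related classes.
GRAPH = {
    "zebra": ["horse", "donkey"],
    "horse": ["donkey", "zebra"],
    "donkey": ["horse", "zebra"],
}

# Prototypes (mean feature vectors) learned from seen classes only.
PROTOTYPES = {"horse": [0.8, 0.2], "donkey": [0.6, 0.4]}


def infer_prototype(unseen: str, graph: dict[str, list[str]],
                    prototypes: dict[str, list[float]]) -> list[float]:
    """Estimate an unseen class prototype as the mean of its seen neighbors."""
    neighbors = [n for n in graph.get(unseen, []) if n in prototypes]
    if not neighbors:
        raise ValueError(f"no seen neighbors for {unseen!r}")
    dim = len(prototypes[neighbors[0]])
    return [
        sum(prototypes[n][i] for n in neighbors) / len(neighbors)
        for i in range(dim)
    ]


zebra_prototype = infer_prototype("zebra", GRAPH, PROTOTYPES)
print(zebra_prototype)
```

The inferred prototype can then be used like any other class representation, for example in a nearest-prototype classifier.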

Future Trends in Zero-Shot Transfer

The future of zero-shot transfer appears promising. Advances in model architecture & learning algorithms are likely to enhance capabilities. Researchers are exploring more effective ways to optimize embeddings & improve generalization.

Similarly, integration with other machine learning techniques presents opportunities for growth. Combining zero-shot transfer with reinforcement learning or unsupervised learning could yield models with even greater adaptability. This could open new applications across various sectors.

Incorporating user feedback into zero-shot models also presents a significant trend. By allowing models to learn from user interactions, the adaptability of zero-shot systems may increase. This aspect emphasizes the importance of continual learning & improvement in real-world applications.

Real-World Impact of Zero-Shot Transfer

The real-world impact of zero-shot transfer continues to grow. Businesses are adopting these methods to reduce costs associated with data labeling. In sectors like e-commerce, companies can efficiently classify products without needing extensive labeled datasets.

Zero-shot capabilities also drive faster innovation cycles. With models able to tackle new tasks promptly, products & services can evolve quickly based on changing market needs. This capability fosters a competitive edge in tech-driven industries.

On top of that, industries such as finance benefit from zero-shot capabilities in fraud detection. Without needing labeled examples for every emerging fraud type, models can adapt & learn from anomalies in transaction data. This flexibility enhances security measures & protects users.

The Role of Language Models in Zero-Shot Transfer

Language models play a pivotal role in the effectiveness of zero-shot transfer. These models can generalize understanding across tasks & contexts, demonstrating significant flexibility. For instance, they can perform sentiment analysis on textual data without specific training for that task.
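With a language model, zero-shot sentiment analysis usually comes down to phrasing the task as an instruction. The sketch below shows that pattern under stated assumptions: `complete` is any text-completion callable supplied by the caller, & `fake_complete` is a hard-coded stand-in used only so the example runs without a real model. The prompt wording is illustrative, not a recommended template.

```python
from __future__ import annotations

from typing import Callable


def build_prompt(text: str, labels: list[str]) -> str:
    """Describe the task in plain language; no labeled examples are included."""
    options = ", ".join(labels)
    return (
        f"Classify the sentiment of the following text as one of: {options}.\n"
        f"Text: {text}\n"
        "Sentiment:"
    )


def zero_shot_classify(text: str, labels: list[str],
                       complete: Callable[[str], str]) -> str:
    """Send the prompt to a completion function and map the reply to a label."""
    answer = complete(build_prompt(text, labels)).strip().lower()
    for label in labels:
        if answer.startswith(label.lower()):
            return label
    return "unknown"  # the reply did not match any allowed label


def fake_complete(prompt: str) -> str:
    """Stand-in for a real language model call (assumption, for demo only)."""
    return " Positive" if "love" in prompt.lower() else " Negative"


result = zero_shot_classify("I love this phone", ["positive", "negative"], fake_complete)
print(result)
```

Passing the model call in as a function keeps the sketch independent of any particular provider & makes the label-matching logic easy to test.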

On top of that, advancements in transformer-based architectures have propelled the success of zero-shot techniques. These architectures excel in capturing long-range dependencies & contextual understanding, fundamental for generalizing task performance effectively. They have become a standard in NLP applications.

Consequently, the ongoing development of language models presents exciting prospects for zero-shot transfer. As these models improve, their capabilities to understand & process multiple tasks without previous exposure will continue to expand, enhancing their utility across sectors.

The Importance of Data Quality in Zero-Shot Transfer

Data quality significantly affects zero-shot transfer performance. High-quality data ensures that models learn valuable representations, which are critical for accurate generalization. Without a strong foundation, the model’s ability to apply learned knowledge diminishes.

Noise in training data can also lead to incorrect assumptions. If models learn from flawed datasets, they may struggle to interpret new tasks accurately. Ensuring clean, well-structured data is crucial for successful zero-shot applications.

Lastly, understanding the complexity of the data domain plays a role. Training on a diverse array of tasks improves the model’s adaptability. As such, researchers should prioritize data diversity & quality to maximize zero-shot learning benefits.

Table: Key Differences Between Zero-Shot Transfer & Traditional Transfer Learning

| Aspect | Zero-Shot Transfer | Traditional Transfer Learning |
|---|---|---|
| Data Requirement | No target task data | Requires target task data |
| Flexibility | Very flexible | Moderately flexible |
| Efficiency | Highly efficient | Less efficient |

My Experience with Zero-Shot Transfer

In my experience working with machine learning, I applied zero-shot transfer during a project. While developing a chatbot, I noticed the importance of training it on diverse examples. Without sufficient labeled data, leveraging a pre-trained language model allowed it to comprehend user queries efficiently.

This practical application showcased the effectiveness of zero-shot approaches. The model quickly adapted to various inquiries & provided relevant responses. Observing this flexibility reinforced the potential of zero-shot transfer in real-world applications.

Using zero-shot transfer expanded the chatbot’s capabilities significantly. It led to a better user experience while minimizing the time spent on data preparation. This successful project illuminated the profound impact of zero-shot learning in machine learning methods.

Future Research Directions in Zero-Shot Transfer

Future research will likely explore several avenues to improve zero-shot transfer. One focus is on model architectures that generalize more robustly. Researchers aim to design models that can learn & adapt efficiently on the fly.

Hybrid models that combine zero-shot with supervised & semi-supervised learning present another opportunity. Such combinations could result in systems that use limited labeled data effectively while maintaining zero-shot capabilities.

Investigating how zero-shot transfer behaves across languages & cultures will also be essential. As global communication evolves, models that can adapt their understanding to diverse contexts will be more broadly applicable. This work should open new research pathways & insights into zero-shot methodologies.

Table: Challenges in Zero-Shot Transfer

| Challenge | Description |
|---|---|
| High-Quality Representations | Need for robust feature extraction |
| Domain Shift | Difficulty adapting to new domains |
| Performance Evaluation | Challenges in assessing accuracy |
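
To make the performance evaluation challenge concrete, here is a minimal sketch of one convention often used in generalized zero-shot evaluation: compute per-class accuracy separately on seen & unseen classes, then combine the two with a harmonic mean so that a model cannot score well by ignoring unseen classes. The function names, class names, & predictions below are illustrative assumptions, not results from a real system.

```python
from collections import defaultdict


def mean_per_class_accuracy(true_labels, predicted_labels):
    """Average the accuracy of each class so frequent classes do not dominate."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, guess in zip(true_labels, predicted_labels):
        total[truth] += 1
        if truth == guess:
            correct[truth] += 1
    return sum(correct[c] / total[c] for c in total) / len(total)


def harmonic_mean(seen_accuracy, unseen_accuracy):
    """Combine seen and unseen accuracy; low if either one is low."""
    if seen_accuracy + unseen_accuracy == 0:
        return 0.0
    return 2 * seen_accuracy * unseen_accuracy / (seen_accuracy + unseen_accuracy)


# Made-up labels and predictions, for illustration only.
seen_true = ["cat", "cat", "dog", "dog"]
seen_pred = ["cat", "cat", "dog", "cat"]
unseen_true = ["zebra", "zebra", "okapi", "okapi"]
unseen_pred = ["zebra", "horse", "horse", "okapi"]

seen_acc = mean_per_class_accuracy(seen_true, seen_pred)        # cat 1.0, dog 0.5
unseen_acc = mean_per_class_accuracy(unseen_true, unseen_pred)  # zebra 0.5, okapi 0.5
h_score = harmonic_mean(seen_acc, unseen_acc)

print(f"seen={seen_acc:.2f} unseen={unseen_acc:.2f} harmonic={h_score:.2f}")
```

Reporting the two accuracies alongside their harmonic mean makes it easier to spot a model that performs well on seen classes but poorly on unseen ones.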

The Intersection of Zero-Shot Transfer & Artificial Intelligence

Zero-shot transfer aligns closely with current trends in artificial intelligence (AI). As AI continues to evolve, the demand for adaptable systems grows. Zero-shot techniques offer a complementary approach to traditional training methods, enhancing AI’s ability to solve diverse problems.

Zero-shot learning can also be integrated with existing AI frameworks. By building zero-shot principles into their pipelines, developers can shorten the time it takes to deploy adaptable systems, since a new task no longer requires collecting & labeling a fresh dataset first.

Finally, as industries adopt AI at an increasing rate, the relevance of zero-shot transfer will only grow. Organizations will seek efficient solutions that require minimal resources while maintaining high accuracy. Zero-shot transfer offers a practical response to these evolving demands.

Final Thoughts on Zero-Shot Transfer in Machine Learning

Zero-shot transfer represents a transformative approach in machine learning. Its ability to adapt without extensive labeling facilitates innovation & efficiencies across sectors. As technology continues to advance, this method will likely play a pivotal role in shaping the future landscape of machine learning & artificial intelligence.

What is Zero-Shot Transfer?

Zero-Shot Transfer refers to the ability of a machine learning model to make predictions on tasks or categories that it did not encounter during training. This approach leverages the knowledge that the model has acquired from different but related tasks.

How does Zero-Shot Transfer work?

Zero-Shot Transfer works by utilizing semantic understanding & relationships between different categories. Models trained on a set of tasks can apply learned knowledge to new tasks using attributes or descriptions that generalize across domains.
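
The attribute-based variant of this idea can be sketched as follows. This is a minimal illustration, assuming a hypothetical attribute predictor that was trained only on seen classes; the class names, the three attributes, & the scores are made up for the example.

```python
# Hypothetical attribute signatures: (has_stripes, has_hooves, lives_in_water).
CLASS_ATTRIBUTES = {
    "horse": (0, 1, 0),    # seen during training
    "tiger": (1, 0, 0),    # seen during training
    "zebra": (1, 1, 0),    # unseen: known only through its description
    "dolphin": (0, 0, 1),  # unseen: known only through its description
}


def predict_class(predicted_attributes, class_attributes):
    """Return the class whose attribute signature is closest to the prediction."""
    def squared_distance(signature):
        return sum((p - s) ** 2 for p, s in zip(predicted_attributes, signature))

    return min(class_attributes, key=lambda name: squared_distance(class_attributes[name]))


# Assumed output of the attribute predictor for a new image:
# strongly striped, strongly hooved, almost certainly not aquatic.
attribute_scores = (0.9, 0.8, 0.1)
label = predict_class(attribute_scores, CLASS_ATTRIBUTES)
print(label)  # zebra
```

The model never saw a labeled zebra. It only needs to recognize attributes it learned from other classes, & the description of the new class does the rest.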

What are the benefits of Zero-Shot Transfer?

The benefits of Zero-Shot Transfer include reducing the need for extensive labeled data, enabling models to adapt to new tasks quickly, & facilitating the handling of rare or unseen classes without the requirement for retraining.

In which scenarios is Zero-Shot Transfer most useful?

Zero-Shot Transfer is especially useful in situations where data is scarce, such as in medical diagnosis, language processing, & any domain where collecting labeled examples is costly or impractical.

What are some challenges of implementing Zero-Shot Transfer?

Challenges include ensuring that the model has been trained on sufficiently related tasks, dealing with potential inaccuracies in attribute representation, & managing the limitations of the model’s understanding of novel concepts.

How does Zero-Shot Transfer impact model performance?

Zero-Shot Transfer can enhance model performance by allowing the model to generalize its knowledge, but accuracy may drop if the model cannot effectively relate the new task to its existing knowledge base.
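
One possible way to limit the damage from low-confidence predictions is to let the system abstain when no label clearly wins. The sketch below assumes the model already produces a score per candidate label; the function name, thresholds, & scores are hypothetical & would need tuning on real data.

```python
def predict_or_abstain(label_scores, min_score=0.5, min_margin=0.1):
    """Return the top label, or None when the prediction looks unreliable.

    A prediction is rejected if the best score is below min_score or if it
    beats the runner-up by less than min_margin.
    """
    ranked = sorted(label_scores.items(), key=lambda item: item[1], reverse=True)
    best_label, best_score = ranked[0]
    runner_up_score = ranked[1][1] if len(ranked) > 1 else float("-inf")
    if best_score < min_score or best_score - runner_up_score < min_margin:
        return None
    return best_label


# Illustrative scores for a support chatbot with two candidate intents.
print(predict_or_abstain({"billing": 0.82, "shipping": 0.35}))  # billing
print(predict_or_abstain({"billing": 0.41, "shipping": 0.38}))  # None (score too low)
print(predict_or_abstain({"billing": 0.62, "shipping": 0.58}))  # None (margin too small)
```

Abstained inputs can be routed to a fallback, such as a human reviewer or a clarifying question.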

What role do embeddings play in Zero-Shot Transfer?

Embeddings serve as a bridge in Zero-Shot Transfer by encoding semantic information about different tasks & categories, thus allowing the model to map new tasks into the learned space effectively.
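
As a rough illustration of that mapping, the sketch below places an input & several candidate labels in the same vector space & ranks the labels by cosine similarity. The three-dimensional vectors are toy values chosen by hand; in practice they would come from a pre-trained encoder, which is assumed here rather than shown.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_labels(input_embedding, label_embeddings):
    """Sort candidate labels from most to least similar to the input."""
    scores = {
        label: cosine_similarity(input_embedding, vector)
        for label, vector in label_embeddings.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Toy label embeddings (hypothetical values, not from a real encoder).
LABEL_EMBEDDINGS = {
    "sports": [0.9, 0.1, 0.0],
    "finance": [0.1, 0.9, 0.1],
    "cooking": [0.0, 0.2, 0.9],
}

# Toy embedding of a new input that was never labeled during training.
query_embedding = [0.2, 0.8, 0.3]

ranking = rank_labels(query_embedding, LABEL_EMBEDDINGS)
best_label = ranking[0][0]
print(best_label)  # finance
```

Adding a new category only requires adding its embedding to the dictionary; no retraining step is involved in this sketch.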

Can Zero-Shot Transfer be used in natural language processing?

Yes, Zero-Shot Transfer is widely used in natural language processing to understand & generate text about topics or categories the model was not directly trained on. Applications such as sentiment analysis & topic classification benefit from it.

How does Zero-Shot Transfer compare to traditional transfer learning?

Unlike traditional transfer learning, which requires some labeled examples of the new task, Zero-Shot Transfer allows models to operate on completely new tasks without any specific training on those categories.

What advancements have been made in Zero-Shot Transfer techniques?

Advancements in Zero-Shot Transfer include improved semantic modeling, better representation learning techniques, & the development of sophisticated architectures that can efficiently leverage different types of information for unseen tasks.

Conclusion

In summary, zero-shot transfer is a powerful technique in the field of machine learning that allows models to make predictions on tasks they haven’t been explicitly trained for. This means that with zero-shot transfer, AI can leverage knowledge from one area to handle new situations more effectively. As we continue to advance technology, understanding & using zero-shot transfer can lead to smarter, more adaptable machines. Its impact on machine learning is significant, offering new possibilities in various applications. Embracing this approach can help drive innovation & efficiency in AI systems.
